Risk Management and Regulation

Dr. Andrew M. Street

Abstract

This paper examines financial risk management from the regulatory perspective. It considers quantitative and qualitative aspects of risk management and how a regulator can view these. It considers such risk management problems as model errors and inadequate internal controls, and how they may be dealt with from both the firm’s and the regulator’s perspective. It discusses the interaction between capital, risk management and internal controls from a regulatory perspective.

1. Introduction

For good or for bad, the financial industry in Europe and most of the rest of the world is regulated by some official body or other. In some cases a firm (be it a bank, a securities house, a CTA, an investment bank or a trading house) may be subject to many regulations and to oversight by multiple regulators. Does all this regulation actually do any good? Does it contribute to a safer and better world? Does it at least push firms into better operating practices, not only for the public at large but also for themselves?

What I hope to do in this paper is to give a small insight into how a regulator might view a firm, and in particular that firm’s risk management process, since the taking and managing of risk is at the very core of the business activity. I also hope that the paper may go some way to redress the balance, because for too long regulation has been seen by some as the enemy of common sense.

It should come as no surprise to anyone that regulators rank the firms they regulate and focus resources on those firms that do badly. The Securities and Futures Authority (SFA) has a ranking scheme called “FIBSPAM”. It should also come as no surprise that risk management carries a heavy weighting within the score, and is partially graded by risk management specialists within SFA. Further, it should come as no surprise that highly rated firms are treated differently from their less illustrious brethren, through a less intrusive visit cycle and the ease with which they gain capital concessions. If this does come as a surprise to you, I hope the following will help to explain why it should not.

2. The Risk Management Process

Firms with good risk management are deemed much less likely to fail or to become a regulatory embarrassment. Risk management in this context is a complex and multi-dimensional process. There are many aspects of risk within a business enterprise, including the better known ones of market risk, credit risk, operational risk, liquidity risk and fraud risk. Error or mishandling in any of these may lead, at the very least, to embarrassment in the press and therefore perhaps a reduction in business profile or, at worst, to the failure of a firm through the breakdown of some vital internal control. Good risk management is therefore a way of foreseeing and limiting potential problems, and in its very essence makes for sound business practice.

It is only when good risk management breaks down and the problem becomes unmanageable that reliance on insurance in the form of equity (or subordinated loan) capital comes into play. Capital by itself is therefore not a substitute for adequate risk management; it acts as a back-stop or last-ditch defence.

Someone once said that trying to describe good risk management was like trying to describe a giraffe to someone who had never seen one: it is difficult to imagine what it looks like, but you know it when you see it. In general, the inspections and checks that regulators carry out on regulated firms are intended not only to check statutory compliance with rules and regulations but also to investigate the adequacy of internal controls and, with them, the whole risk management process. Among the more progressive regulators, indications that a firm is well controlled certainly lead to less intrusive and less frequent investigation, and thus to a lighter regulatory burden as partial reward for being well risk-managed.

The process of risk management can be viewed as having two stages. The first stage is about identifying risk within the business process and, if possible, quantifying its magnitude and sources. The second stage is about setting limits and parameters within which the risk is constrained and controlled, in order to meet the goals of the business with a sensible and considered risk profile. So to be successful in risk management it is important not only to identify and measure but also to limit and control. In looking at a firm’s risk management, a regulator will therefore consider how the firm and its management use internal controls to achieve these twin aspects of risk management. As with any control structure, the regulator will expect to see quality control mechanisms, such as feedback loops and spot checks, that ensure the system is in fact functioning properly and allow the timely detection of errors.

Some elements of risk management will be highly quantitative, in the sense that the risk may be sensibly represented by numbers generated from quantitative methods such as probability or arbitrage pricing theory. In other aspects of risk management the risk may not lend itself easily to quantification (due to lack of data or complex parameterisation) and will require qualitative or comparative types of measurement and control. Thus some market risks, with wide availability of extensive data and a good deal of sophisticated analysis and option theory, lend themselves very readily to quantification in numerical terms (although one does need to be wary of placing too much faith in theory, especially as risk numbers are frequently quoted to an unfeasibly large number of significant figures and without error estimates!). But the quantification of operational risk per se is difficult, and indeed many aspects of this type of risk have more to do with human interaction and with the dependence on, or criticality of, certain key roles or individuals within an organisation.

However, just because a risk cannot readily be expressed as a number does not mean that it does not exist, nor that it cannot be managed. Indeed many of the solutions in operational risk areas involve a system of dual control, or alternatively some process of review and a negative feedback loop, so that a lack of adequate review will in fact automatically trigger an event that has to be acted upon. One of the hardest elements to quantify within an organisation is the effect of political struggles, or of a person’s career aspirations within that organisation, which may lead an individual to act against the interests of a successful firm-wide risk management framework. These types of risk must be taken into account in looking at the risk management of a firm.
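The dual-control and negative-feedback idea above can be sketched in a few lines of Python. This is a minimal illustration only: the `ControlItem` record, the `escalations` function and the 24-hour review window are hypothetical names and parameters chosen for the example, not taken from any particular firm's system. The key design choice is that silence is never treated as approval.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class ControlItem:
    """A hypothetical item (trade ticket, price, reconciliation) needing sign-off."""
    item_id: str
    created: datetime
    prepared_by: str
    reviewed_by: Optional[str] = None  # stays None until a second person signs off


def escalations(items: List[ControlItem], now: datetime,
                window: timedelta = timedelta(hours=24)) -> List[str]:
    """Return the IDs of items that must be acted upon.

    An item clears only through a positive review by someone other than the
    preparer, so a missing or self-performed review raises an event by default.
    """
    flagged = []
    for item in items:
        self_reviewed = item.reviewed_by == item.prepared_by  # dual control broken
        overdue = item.reviewed_by is None and now - item.created > window
        if self_reviewed or overdue:
            flagged.append(item.item_id)
    return flagged


now = datetime(1998, 3, 2, 9, 0)
items = [
    ControlItem("T1", datetime(1998, 3, 1, 8, 0), "alice", "bob"),     # properly reviewed
    ControlItem("T2", datetime(1998, 2, 28, 8, 0), "alice"),           # unreviewed, overdue
    ControlItem("T3", datetime(1998, 3, 1, 12, 0), "carol", "carol"),  # self-reviewed
]
print(escalations(items, now))  # ['T2', 'T3']
```

In practice the flagged list would feed an exception report owned by someone independent of the preparers.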

We focus for a moment on market risk as one of the more complete areas of the risk management framework, from the point of view of both qualitative and quantitative standards and techniques. The basic risk management necessity here is to identify the sources of market risk to which a firm is prone, and to analyse how that risk profile may evolve over time in terms of profit and loss variations, so that the potential profit and loss variations remain within the organisation’s aspirations in terms of risk and return, overall financial stability and longevity.

The cornerstone of this market risk management operation is clearly the data. This means not only position information, i.e. accurately describing the type of obligation, contract or risk entered into on both the long side and the short side, but also the information needed for the mark to market process: for example FX or equity spot prices, yield curves and interest rate volatility curves. The whole risk management process will therefore hinge on the quality and adequacy of the data fed into its quantitative aspects. Clearly, things can go very wrong here if not all the information is captured or if the information fed into the system comes from a dubious source: for example, from the front office, where a trader may wish to manipulate positions for his own ends, or from a market which has very little price transparency and is perhaps illiquid in some sense. It is therefore important within market risk management to ensure, as far as possible and even at the first level, that the information feeding into the risk management system is as complete and accurate as possible, and that some effort is made to quantify any errors.

In any real-world situation there will be uncertainty, and this needs to be quantified if possible and monitored on an ongoing basis. The importance of this process cannot be overestimated, and it is dangerous to underestimate the thoroughness and accuracy with which market parameters need to be determined and verified by a firm for a successful risk management process. Indeed a comparatively large amount of the conflict between firms and their regulators stems from the mark to market process and from the representation of risk within the risk management framework. Where firms deal in products that are difficult to mark to market because of complexity or illiquidity, it is not uncommon for them to rely upon the “in-house” expert, who is generally the trader and who naturally has a vested interest in the P&L (particularly the P rather than the L!).

In most organisations the P&L is a major direct factor in determining the overall compensation of the trader or risk taker. His involvement in the determination of the P&L therefore has to be strictly controlled and monitored, as history has demonstrated to us many times. There is an overwhelming temptation to make things look better than someone more independent might assess them to be. The risk to the firm is not only that of being duped into paying more than is fair, but also that the firm’s risk capital base is overestimated: the firm believes it has more of a capital buffer than it really has, and may even fall below the minimum capital requirements of its regulators. Clearly this has ramifications for systemic risk.

Similarly, in the area of new products, the development of new risk types (for example external barrier options or credit derivatives) puts an onus on the existing risk management system to book them properly and to represent their risks adequately within the current framework. This can lead to situations where deals are booked outside the main system and become what are known as “NIS”, or Not In System, trades. These are a particular area of concern to regulators because, generally speaking, they do not have the same level of control and oversight as more traditional areas of the business.
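One practical control over NIS trades is a regular reconciliation of every deal that has been confirmed with counterparties against what the risk management system actually holds. The Python sketch below assumes two hypothetical lists of deal identifiers, one from confirmations and one from the risk system; real feeds, identifiers and matching rules will be more involved.

```python
from typing import Dict, Iterable, Set


def reconcile(confirmed: Iterable[str], in_risk_system: Iterable[str]) -> Dict[str, Set[str]]:
    """Compare deal IDs from confirmations against those held in the risk system.

    'not_in_system' are confirmed deals the risk system cannot see (NIS trades);
    'unconfirmed' are booked deals with no matching confirmation. Both are breaks
    to be investigated by someone independent of the desk.
    """
    confirmed_set = set(confirmed)
    system_set = set(in_risk_system)
    return {
        "not_in_system": confirmed_set - system_set,
        "unconfirmed": system_set - confirmed_set,
    }


breaks = reconcile(["D1", "D2", "D3"], ["D1", "D3", "D4"])
print(sorted(breaks["not_in_system"]))  # ['D2']
print(sorted(breaks["unconfirmed"]))    # ['D4']
```

Using confirmations rather than the desk's own blotter as the reference side matters: the point is to compare against a source the traders do not control.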

From a regulatory point of view, and indeed from a good business point of view, it is therefore necessary to control the way that new products and new risks are brought into the firm. In particular, the degree of discretion that self-interested individuals have over reported profits should be managed sensibly and reviewed by people who can be more objective.

Once the information is in the system, we of course need to be sure that the system performs its task according to its design. The testing and implementation of a system is thus an important part of establishing a good risk management framework, as is the security of the source code in the existing system and in further releases of that system. The systems themselves should have undergone extensive testing, not only from a functionality viewpoint but also to verify the task or processing that they perform. Most of these systems operate on a database, with an algorithm or model that performs some sort of processing to generate risk management numbers; the resulting numbers are frequently stored back in the database. The ability to sort information from the database and to aggregate it, so as to give a complete breakdown of the risk under different queries, is thus an essential feature of the risk management system.

It is probably worth pausing at this point, when considering the regulator's view of risk management, to consider the core values on which this view is based. They will serve to reinforce the points made above. In particular, the core values of the Securities & Futures Authority are as follows:

Item 1. Detailed rules create an unsatisfactory capital regime.

That is to say, complex line-by-line rules are a very easy way of creating opportunities for people to take risk without putting up capital against that risk. The most effective way is to have a principle of capital against risk rather than complex line-by-line rules.

Item 2. Capital is not a substitute for effective risk management.

This is because if one does not measure and control risk it is possible to lose any amount of capital excess and drive the firm into jeopardy. Capital is back-stop insurance to the risk management process.

Item 3. Financial institutions are responsible for their own health.

It is not the regulator's responsibility to prevent individual firms from going bankrupt. The regulator's responsibility is to non-professional investors and to the health of the financial system as a whole, rather than to individual regulated organisations. Firms are in business to take risk for reward and, as a consequence of taking that risk, may go bankrupt; more importantly, they should be allowed to. As Dr Alan Greenspan, Chairman of the Federal Reserve Board, once said, “the optimal number of bank failures is not zero”.

Item 4. Level playing fields are an unnecessary impediment to effective supervision.

The Basle and then the CAD (Capital Adequacy Directive) papers were created in order to standardise capital requirements and create the possibility of a level playing field between financial institutions in Europe. But the actual supervision of a particular entity is not predicated on that entity having exactly the same standing with regard to risk capital as its competitors, nor is this necessary for effective supervision. It is therefore not necessary for a supervisor, as a matter of priority, to ensure a level playing field. However, generally speaking the legislation provides for a level playing field, and as part of the supervisory activity it is administered in such a way that a level playing field is created as far as is prudent.

In the core values stated above (Item 2) it was affirmed that capital cannot be a substitute for good risk management. As stated, it is SFA’s belief that good risk management cannot be replaced by capital, since the size of potential losses, if they cannot be measured or contained, could be large enough to absorb any amount of capital excess and drive the firm into insolvency. Put simply, if you cannot gauge the risk or limit it, you have no control over it, and it can overcome the firm’s financial resources.

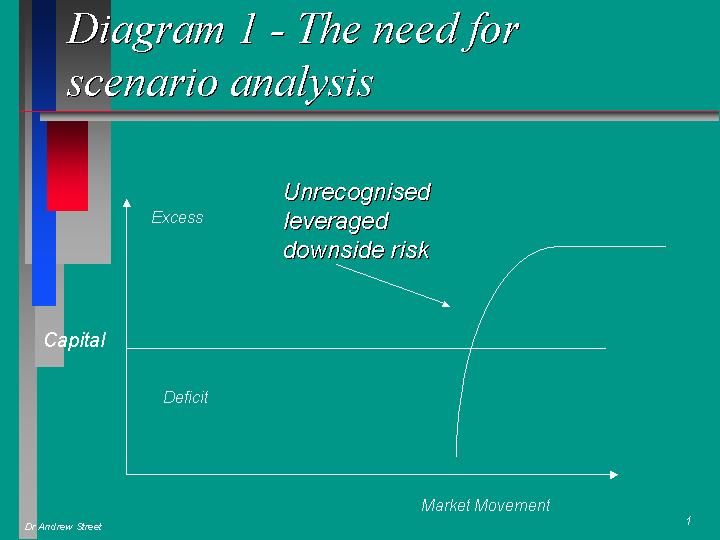

This is particularly true for leverage portfolios of derivatives, for

example, where very large changes in the value of the portfolio can occur over

very small movements in the market. Let

us consider for example a portfolio of derivatives containing a number of

Diagram 1

barrier

options of the type called “up and out” call options.

It is possible to obtain a very large change in value that occurs rapidly

over a very small movement in the underlying market.

A risk management system looking at the situation purely from a

sensitivity analysis (i.e. the Greeks delta, gamma etc.) approach would

completely fail to miss the potential pitfall if the market moves a few points

away from the current spot levels. Therefore

it would be vital with this type of risk to do scenario analysis (portfolio

revaluation over a range of underlying movements) in order to establish the

potential loss for a range of market movements away from the current spot

levels. In just the same way as risk management cannot not be performed from the

P&L (since it is retrospective and like trying to drive a car forward whilst

looking in the rear view mirror), path dependent risk is best considered by

doing scenarios rather than static risk measures.

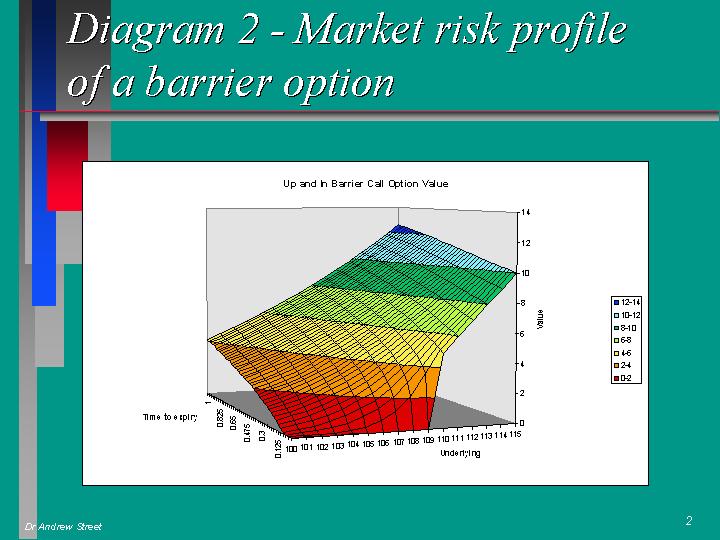

Diagram 2
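The barrier-option point can be made concrete with a small experiment. The Python sketch below prices a continuously monitored up-and-out call (zero rebate, no dividends) with the standard closed-form barrier formula, then compares full revaluation over a range of spot moves with the delta-gamma estimate taken at today's spot. It is an illustration under stated assumptions, not necessarily the method behind Diagrams 1 and 2; all parameter values are invented.

```python
import math


def norm_cdf(x: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * math.erfc(-x / math.sqrt(2.0))


def bs_call(S, K, r, sigma, T):
    """Black-Scholes value of a European call on a non-dividend-paying asset."""
    sd = sigma * math.sqrt(T)
    d1 = (math.log(S / K) + (r + 0.5 * sigma ** 2) * T) / sd
    return S * norm_cdf(d1) - K * math.exp(-r * T) * norm_cdf(d1 - sd)


def up_and_out_call(S, K, H, r, sigma, T):
    """Continuously monitored up-and-out call, zero rebate, no dividends.

    Closed form A - B + C - D for strike K below barrier H; worthless if
    K >= H or if the barrier has already been reached.
    """
    if S >= H or K >= H:
        return 0.0
    sd = sigma * math.sqrt(T)
    mu = (r - 0.5 * sigma ** 2) / sigma ** 2
    k_df = K * math.exp(-r * T)
    shift = (1 + mu) * sd
    x1 = math.log(S / K) / sd + shift          # equals the Black-Scholes d1
    x2 = math.log(S / H) / sd + shift
    y1 = math.log(H * H / (S * K)) / sd + shift
    y2 = math.log(H / S) / sd + shift
    p1 = (H / S) ** (2 * (mu + 1))
    p2 = (H / S) ** (2 * mu)
    A = S * norm_cdf(x1) - k_df * norm_cdf(x1 - sd)
    B = S * norm_cdf(x2) - k_df * norm_cdf(x2 - sd)
    C = p1 * S * norm_cdf(-y1) - p2 * k_df * norm_cdf(-y1 + sd)
    D = p1 * S * norm_cdf(-y2) - p2 * k_df * norm_cdf(-y2 + sd)
    return A - B + C - D


def scenario_vs_greeks(S0, K, H, r, sigma, T, moves, bump=0.01):
    """(move, full-revaluation P&L, delta-gamma P&L) for each relative spot move."""
    price = lambda s: up_and_out_call(s, K, H, r, sigma, T)
    v0 = price(S0)
    h = S0 * bump
    v_up, v_dn = price(S0 + h), price(S0 - h)
    delta = (v_up - v_dn) / (2 * h)              # central-difference Greeks at spot
    gamma = (v_up - 2 * v0 + v_dn) / h ** 2
    rows = []
    for m in moves:
        dS = S0 * m
        rows.append((m, price(S0 + dS) - v0, delta * dS + 0.5 * gamma * dS ** 2))
    return rows


for move, full, greek in scenario_vs_greeks(100.0, 100.0, 120.0, 0.05, 0.2, 0.5,
                                            [-0.10, -0.05, 0.05, 0.10, 0.20]):
    print(f"spot move {move:+.0%}: full revaluation {full:+.3f}, delta-gamma {greek:+.3f}")
```

Near the barrier the two columns diverge: full revaluation shows the entire value disappearing once spot reaches 120, which no local Taylor expansion at 100 can anticipate.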

We have considered market risk as a particular case for risk management. As I have previously mentioned, other risks include counterparty risk, operational risk, legal risk, “reputational risk”, liquidity risk, fraud risk, funding risk and many other forms of risk.

SFA’s prime concern with risk in general within regulated firms is the protection of investors and the prevention of systemic meltdown. Practically speaking, this means making sure that adequate risk management across all risks exists within a firm as a form of accident prevention, and that the maintenance of capital as a form of insurance in the case of a particular crisis is enforced.

Focusing once again on market risk, we can define it in terms of the change in value of assets and liabilities as markets move. In the risk management process we need to value assets and liabilities, and this is usually achieved via the mark to market. In marking to market there needs to be an adjustment not only for the bid/offer (bid/ask) spread but also for the liquidity (the market size behind each quote) in that particular market at that particular time. Liquidity can be considered as the market size (notional) in which a transaction may be undertaken at the prices at which the bid and offer are quoted at that time. Liquidity is therefore dynamic and a function of market volatility: in liquid markets in normal times, comparatively large transactions may be undertaken at the bid or offer price with relatively little market impact. In times of market uncertainty, however, such as after a crash or during economic turmoil (such as in South East Asia in late 1997), it may be impossible to deal in anything like normal market size at the indicated price.
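A minimal Python sketch of the adjustment described above follows. It assumes a deliberately simple, hypothetical liquidity model: the position is closed out at the bid (long) or offer (short) for up to the quoted size, and each further block of that size deals a fixed `impact_per_block` worse. Real liquidity reserves would be calibrated to the market in question; the function name and parameters are illustrative.

```python
def liquidity_adjusted_value(position: float, bid: float, offer: float,
                             quote_size: float, impact_per_block: float) -> float:
    """Value of closing out `position` under a simple, hypothetical market-impact model.

    Longs (position > 0) are sold at the bid, shorts bought back at the offer,
    for up to quote_size units; each further block of quote_size is assumed to
    deal impact_per_block worse than the previous one.
    """
    if quote_size <= 0:
        raise ValueError("quote_size must be positive")
    remaining, block, value = abs(position), 0, 0.0
    while remaining > 0:
        qty = min(remaining, quote_size)
        if position > 0:
            value += qty * (bid - block * impact_per_block)
        else:
            value -= qty * (offer + block * impact_per_block)
        remaining -= qty
        block += 1
    return value


mid = (99.0 + 101.0) / 2
for pos in (25, -25):
    close_out = liquidity_adjusted_value(pos, 99.0, 101.0, quote_size=10, impact_per_block=0.5)
    print(f"position {pos:+d}: mid-market {pos * mid:+.1f}, close-out {close_out:+.1f}, "
          f"reserve {pos * mid - close_out:.1f}")
```

In a crisis both inputs move against the firm at once: `quote_size` shrinks and `impact_per_block` grows, so the reserve widens even if the mid price is unchanged.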

The models that are used to create the standardised risk profile of derivatives contracts are, as stated before, only an approximation to reality and frequently contain assumptions that may not always hold. A key assumption that is frequently made concerns the ability to construct a replicating portfolio and the costs involved in rebalancing such a portfolio; clearly, illiquid markets severely test such assumptions and call into question any valuations derived in this manner.

3. Model Error

The risk management system, as well as containing a database and a processing engine, will invariably contain some kind of financial model. This model could be, for example, an option model, a discounted cash flow model or a convertible bond model. In the case of a swap, the model is used to process the particular form of contract (a schedule of cash flows) into a present value (PV) and to examine the sensitivity (DV01) of the present value to changes in market parameters such as the 1y or 5y interest rate.

Most models are, of course, based on simplifying assumptions. For example, many option models are based on arbitrage pricing theory, which in a Black-Scholes type approach assumes that underlying market prices follow pure geometric random walk processes, with zero bid/offer spread and infinite liquidity. These simplifying assumptions allow the construction of a replicating portfolio, which by arbitrage arguments has a total replication cost equal to the value of the option or derivative contract to be valued.
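The swap case mentioned above can be sketched in Python: discount a schedule of cash flows off a zero curve, and measure DV01 as the change in PV for a one-basis-point bump to a chosen key rate (or to all of them, for a parallel shift). The curve, the linear interpolation between key tenors, continuous compounding and the par-floating-leg shortcut are all illustrative assumptions, not a production curve-building method.

```python
import math
from bisect import bisect_left
from typing import Dict, List, Tuple

Curve = Dict[float, float]             # tenor in years -> continuously compounded zero rate
CashFlows = List[Tuple[float, float]]  # (time in years, amount)


def zero_rate(t: float, curve: Curve) -> float:
    """Linear interpolation between key tenors, flat extrapolation beyond them."""
    tenors = sorted(curve)
    if t <= tenors[0]:
        return curve[tenors[0]]
    if t >= tenors[-1]:
        return curve[tenors[-1]]
    i = bisect_left(tenors, t)
    t0, t1 = tenors[i - 1], tenors[i]
    w = (t - t0) / (t1 - t0)
    return (1 - w) * curve[t0] + w * curve[t1]


def present_value(flows: CashFlows, curve: Curve) -> float:
    return sum(amount * math.exp(-zero_rate(t, curve) * t) for t, amount in flows)


def dv01(flows: CashFlows, curve: Curve, tenors=None, bp: float = 1e-4) -> float:
    """PV change for a +1bp bump to the given key tenors (default: all, i.e. parallel)."""
    bumped = dict(curve)
    for tenor in (curve if tenors is None else tenors):
        bumped[tenor] += bp
    return present_value(flows, bumped) - present_value(flows, curve)


# Receive 5% annual fixed on 100 for 5 years. The floating leg is taken to be
# worth par at a reset date, modelled as -100 at t=0 (a textbook simplification).
curve = {1.0: 0.040, 2.0: 0.042, 5.0: 0.045, 10.0: 0.047}
swap = [(0.0, -100.0)] + [(float(y), 5.0) for y in range(1, 5)] + [(5.0, 105.0)]

print(f"PV {present_value(swap, curve):.4f}")
for tenor in sorted(curve):
    print(f"{tenor:g}y DV01 {dv01(swap, curve, [tenor]):.5f}")
print(f"parallel DV01 {dv01(swap, curve):.5f}")
```

Because interpolation is linear in the key rates, the key-rate DV01s add up (to first order) to the parallel DV01, which is a useful internal consistency check on such a system.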

If any of the assumptions is seriously challenged in practice, then the valuation that these models give can be very far from the actual replication or hedging costs that would be incurred in attempting to risk-manage the particular product or risk profile given by the model. Sensible valuation may then only be possible through realistic hedge simulations, using Monte Carlo methods for example.
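The hedge-simulation idea can be illustrated with a short Monte Carlo experiment in Python. Under assumed parameters it simulates geometric Brownian motion paths, delta-hedges a short call at discrete intervals with a proportional transaction cost, and records the initial capital each path would have needed. With no costs the average should sit close to the Black-Scholes price; with costs it rises above it, which is one measurable form of the gap between model value and real hedging cost. The cost rate, rebalancing frequency and path count are illustrative choices only.

```python
import math
import random


def norm_cdf(x: float) -> float:
    return 0.5 * math.erfc(-x / math.sqrt(2.0))


def bs_call_and_delta(S, K, r, sigma, tau):
    """Black-Scholes call value and delta (no dividends), time to expiry tau > 0."""
    sd = sigma * math.sqrt(tau)
    d1 = (math.log(S / K) + (r + 0.5 * sigma ** 2) * tau) / sd
    value = S * norm_cdf(d1) - K * math.exp(-r * tau) * norm_cdf(d1 - sd)
    return value, norm_cdf(d1)


def mean_hedging_cost(S0, K, r, sigma, T, steps=100, paths=1000, cost_rate=0.0, seed=1):
    """Average PV of the capital needed to delta-hedge a short call to expiry.

    cost_rate is an assumed proportional transaction cost on each share traded.
    """
    rng = random.Random(seed)
    dt = T / steps
    total = 0.0
    for _ in range(paths):
        S = S0
        _, delta = bs_call_and_delta(S, K, r, sigma, T)
        cash = -delta * S - cost_rate * abs(delta) * S      # buy the initial hedge
        for step in range(1, steps + 1):
            z = rng.gauss(0.0, 1.0)
            S *= math.exp((r - 0.5 * sigma ** 2) * dt + sigma * math.sqrt(dt) * z)
            cash *= math.exp(r * dt)                        # cash account accrues interest
            if step < steps:                                # rebalance until expiry
                _, new_delta = bs_call_and_delta(S, K, r, sigma, T - step * dt)
                trade = new_delta - delta
                cash -= trade * S + cost_rate * abs(trade) * S
                delta = new_delta
        payoff = max(S - K, 0.0)
        # Capital C0 such that C0*exp(rT) + cash + delta*S covers the payoff.
        total += math.exp(-r * T) * (payoff - cash - delta * S)
    return total / paths


bs, _ = bs_call_and_delta(100.0, 100.0, 0.05, 0.2, 0.5)
no_cost = mean_hedging_cost(100.0, 100.0, 0.05, 0.2, 0.5)
with_cost = mean_hedging_cost(100.0, 100.0, 0.05, 0.2, 0.5, cost_rate=0.002)
print(f"Black-Scholes {bs:.3f}; hedge cost: no costs {no_cost:.3f}, 0.2% costs {with_cost:.3f}")
```

Using the same seed for both runs means the two hedging costs are computed on identical paths, so their difference isolates the effect of dealing costs rather than sampling noise.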

One needs to consider the adequacy of a particular modelling approach not only with regard to the particular type of risk (e.g. a put option) but also with regard to the underlying market in which that risk profile is actually to be underwritten. It is perfectly reasonable, for example, to use a model such as Garman-Kohlhagen for a liquid short-dated FX (foreign exchange) option, where it would give results for valuation and sensitivity that are perfectly acceptable for risk management purposes; whereas the same model applied to a long-dated option on an individual illiquid equity (say in Korea) may seriously and erroneously ascribe to the risk a value much lower than a more considered and prudent approach would give. Thus a good risk management framework will give serious consideration to the applicability of particular modelling approaches to types of risk, and will restrict the use of models according to the type of risk to be modelled.

In order to model other risks it may be necessary to employ a different modelling technique, or to use an existing model but to estimate the possible error in the valuation and sensitivities obtained with that particular model. Techniques such as Monte Carlo simulation can be used to assess this 'model error'. It needs to be stressed that model error is not an error in the algorithm, or in the implementation of the particular algorithm, but is the error of the particular modelling approach in relation to the real-world situation that is being assessed.

It is not unusual for models used within an organisation actually to contain conflicting assumptions about underlying processes. An example of this would be the use of the Black model for the evaluation of bond options (where prices are taken to follow random walks) alongside the Black model for the evaluation of swaptions (swap options), where a random walk of the underlying yield is taken as the stochastic process. In both cases lognormality of returns is assumed, and it is impossible to have a lognormal price return whilst simultaneously having a lognormal yield return, given the relationship between the price and yield of a discount bond.
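The incompatibility can be seen in a few lines. Assume, for illustration, a discount bond of maturity \(\tau\) priced from a continuously compounded yield \(y\) (other compounding conventions lead to the same conclusion); \(m\) and \(s^2\) denote generic mean and variance parameters:

```latex
\begin{aligned}
P = e^{-y\tau} &\iff y = -\tfrac{1}{\tau}\ln P,\\
\ln P \sim \mathcal{N}(m, s^2) &\implies y \sim \mathcal{N}\!\left(-\tfrac{m}{\tau}, \tfrac{s^2}{\tau^2}\right)\ \text{(normal, hence not lognormal)},\\
\ln y \sim \mathcal{N}(m, s^2) &\implies \ln P = -\tau y = -\tau e^{\ln y}\ \text{(not normal, hence } P \text{ not lognormal)}.
\end{aligned}
```

So if the bond price is lognormal the yield is normal (and can go negative), and if the yield is lognormal the price cannot be; the two Black-model assumptions describe mutually inconsistent worlds.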

This in itself does not necessarily represent a breakdown in the risk management framework, but it must be borne in mind that localised risk management may not optimise the risk management of the firm as a whole. Localised risk management on a desk-by-desk basis may mean that arbitrage is possible between different kinds of products (carrying essentially the same risks) within the same organisation, which is clearly not a satisfactory situation from a risk management perspective.

Models generally require calibration to the market. In the case of complex yield curve models (for example Heath-Jarrow-Morton), where information on the term structure and its volatility are vital inputs derived from a large number of cap/floor, IRG, swap and swaption prices, this process can be very time consuming and can lead to ambiguity: there may be insufficient information to choose between two calibrations that reproduce market prices equally well. In the area of complex interest rate products it is not unknown for two counterparties to trade a product and both book a mark to market profit on the transaction, not only when different models are used but in some cases with the same model and a different calibration.

Once acceptable and applicable models have been chosen, developed and tested within the risk management framework, the processing of the contractual risk (as evidenced by the contract and deal confirmation) into valuations and sensitivities to input market parameters can be used to generate risk reports. These risk reports may take the form of aggregate point Greek sensitivities: delta, gamma, rho, vega and so on are the familiar measures. Or they may entail an analysis of the change in the portfolio's value over a range of scenario moves in the underlying price, the volatility or some other parameter of market risk. Generally speaking, term structure risk can also be considered by bucketing or compartmentalising the portfolio into smaller portfolios, perhaps based on principal component analysis of yield curve moves, grouped by aggregate exposure to particular points in time: for example, three-month to six-month or one-year to two-year time buckets. These reports form the basis of understanding the risk profile of the book.
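Bucketing can be sketched in a few lines of Python: each position's DV01 is assigned to a maturity bucket and the buckets are summed, so that a book which looks flat in aggregate can still show offsetting exposures along the curve. The bucket edges, labels and positions below are invented for illustration.

```python
from bisect import bisect_right
from typing import Dict, List, Tuple

# Hypothetical bucket edges (years): [0,0.25) [0.25,0.5) [0.5,1) [1,2) [2,5) [5,inf)
EDGES = [0.25, 0.5, 1.0, 2.0, 5.0]
LABELS = ["0-3m", "3-6m", "6m-1y", "1-2y", "2-5y", "5y+"]


def bucket_dv01(positions: List[Tuple[float, float]]) -> Dict[str, float]:
    """Sum (maturity_in_years, dv01) pairs into maturity buckets, in bucket order."""
    totals = {label: 0.0 for label in LABELS}
    for maturity, dv01 in positions:
        totals[LABELS[bisect_right(EDGES, maturity)]] += dv01
    return totals


book = [(0.4, -1200.0), (1.5, 2500.0), (1.8, -300.0), (7.0, -1000.0)]
buckets = bucket_dv01(book)
print(f"net DV01 {sum(buckets.values()):+.1f}")  # flat in aggregate...
for label, value in buckets.items():
    print(f"{label:>6}: {value:+.1f}")          # ...but not along the curve
```

The net figure alone would suggest no interest rate risk; the bucketed view shows a curve-steepening exposure that a single parallel-shift limit would not constrain.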

As such, they are vital in communicating the current level of risk on the portfolio, as a tool for planning risk exposure for the future, and in controlling current risk exposure by comparing risk against risk limits. Risk limits are a dynamic quantity, in the sense that they indicate from management to the trading desk the magnitude of particular risks that is desired at any point in time. It is not unreasonable to expect that, during times of expected market turbulence, management may decide to reduce risk limits in order to minimise the impact of any large move upon the firm’s portfolios.

4. Asset Liability Committees (ALCO)

Generally speaking, a firm-wide risk management committee or ALCO (Asset/Liability Committee) will be responsible for varying risk limits based on information about, and expectations of, market conditions in the near future. It may also vary limits based on the results of trading over a period of time in which P&L relative to risk has been higher or lower than expected. The ALCO is therefore responsible for reviewing the performance of portfolios against their risk limits and for setting and varying those risk limits in accordance with the firm’s desired risk and return profile. It is therefore the ALCO, rather than the individual trader down on the desk, that sets the risk/return policy of the firm. This is consistent with the firm's most senior management being directly responsible for setting the risk appetite of the organisation and for controlling that risk appetite according to the required level of volatility in P&L. This method of analysis and control also has the potential benefit of detecting problem areas early, and of allowing risk capital and trader remuneration to be set according to the risk-adjusted return on capital.

The ALCO is, of course, entirely reliant on the quality of the information and analysis with which it is fed. It is therefore vital that a group independent of the risk-taking process exists, responsible for ensuring that the information that goes to the ALCO is processed as completely and accurately as possible, independently of the people the ALCO seeks to manage, i.e. the traders. The ALCO must be able to trust the information it receives and to expect that it has been thoroughly vetted and checked by this independent risk management group. It is also likely that the independent risk management group will have the authority of the ALCO to control risk within the ALCO’s framework. On detecting limit violations, it should have the authority to reduce the risk to within the levels set by the asset liability committee without further reference to anyone else. Having brought the position back within limits, it would, of course, look for explanations of the reasons for the violation. The trading group may apply to the ALCO for an extension on an ad hoc basis, which after due consideration may or may not be granted.
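The limit cycle just described (compare reported risk with ALCO limits, flag excesses, honour any temporary extension the ALCO has granted) can be sketched as follows. The `Limit` and `Breach` structures, the measure names and the sample numbers are hypothetical. One assumed design choice is that a risk with no limit at all is reported as a breach rather than silently ignored, in line with the ALCO's duty to look for risks not covered by a limit.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Limit:
    measure: str                       # e.g. "delta", "vega", "2-5y DV01"
    amount: float                      # ALCO-approved absolute limit
    extension: Optional[float] = None  # temporary ad hoc extension, if granted

    def effective(self) -> float:
        return self.extension if self.extension is not None else self.amount


@dataclass
class Breach:
    measure: str
    exposure: float
    limit: float
    reduce_by: float  # cut needed to bring the exposure back within limit


def check_limits(exposures: Dict[str, float], limits: Dict[str, Limit]) -> List[Breach]:
    breaches = []
    for measure, exposure in exposures.items():
        limit = limits.get(measure)
        if limit is None:
            # A risk with no limit is surfaced, not ignored (treated as a zero limit).
            breaches.append(Breach(measure, exposure, 0.0, abs(exposure)))
            continue
        cap = limit.effective()
        if abs(exposure) > cap:
            breaches.append(Breach(measure, exposure, cap, abs(exposure) - cap))
    return breaches


limits = {"delta": Limit("delta", 1_000_000.0),
          "vega": Limit("vega", 50_000.0, extension=75_000.0)}
exposures = {"delta": -1_250_000.0, "vega": 60_000.0, "correlation": 10_000.0}
for b in check_limits(exposures, limits):
    print(f"{b.measure}: exposure {b.exposure:,.0f} vs limit {b.limit:,.0f}, reduce by {b.reduce_by:,.0f}")
```

Here the vega excess over the base limit is tolerated only because an extension is recorded; once the extension is removed the same check would flag it.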

In addition to the quantitative risk information that the risk management system produces for the ALCO, the ALCO itself will require other forms of information relating to qualitative market conditions and to the development and progress of new products or new systems within the organisation. This is so that the ALCO is aware of how the changing risk profile of the firm fits in with the current market environment, and so that any expected changes may be anticipated and planned for in good time.

Given the very important task that the ALCO performs, it is clearly essential that it is composed of experienced senior individuals (with junior front-line individuals brought into the discussion to explain the points necessary to understand the risks). Typically the ALCO will consist of a representative from each of the independent risk management group, the trading group, the product control group, the treasury/funding group and the credit group within the organisation, along with at least two members of the board responsible for the firm.

It is also vital that minutes are kept and that decisions are documented, so that there can be no uncertainty in the future about the course of action required and mandated by the asset liability committee. The committee also needs to consider whether there are risks being run within the organisation which are not covered by a limit and which are not considered directly by the current risk management framework. The independent risk management group must continually look for and research other areas of risk that are inadequately captured within the existing risk management framework.

How might a regulator view the ALCO’s potency within the firm? The regulator looks for an understanding of the trading mandate and of risk at all levels of the firm. This should extend not only to the front desk but through the middle office into the operations area, and also upward through trading management and ultimately to the organisation's senior management (the ALCO). One of the clear indications of this need to communicate is the existence of unambiguous written guidance and policy with regard to each individual’s role and responsibility within the structure. Other co-factors are the security of the systems and data and the overall approach to information technology within the organisation. Clearly, risk management information and ALCO decisions are only useful if they are communicated accurately and distributed punctually to the appropriate people within the organisation.

The independent risk management group, whose role has been described in relation to the ALCO, also needs to carry out additional tasks such as price testing. This means the verification of mark to market values and also the continued validation of risk modelling. This has the twin aspects of ensuring that positions are realistically valued and that the risk measurement system is able in practice to explain (attribute) P&L movements over time to the identified risk factors.
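P&L explain can be sketched as a Taylor-series attribution: the P&L predicted from reported sensitivities and the day's market moves is compared with the actual P&L from full revaluation, and a large unexplained residual prompts investigation of either the marks or the model. The choice of Greeks, their units, the sample figures and the 10% tolerance below are assumptions for illustration only.

```python
from typing import Dict, Tuple


def explain_pnl(actual_pnl: float, delta: float, gamma: float, vega: float, theta: float,
                d_spot: float, d_vol: float, d_days: float,
                tolerance: float = 0.10) -> Tuple[Dict[str, float], float, bool]:
    """Attribute P&L to risk factors and flag a large unexplained residual.

    Assumed units: vega per unit of d_vol (e.g. per vol point), theta per day.
    Returns (attribution by factor, unexplained amount, flagged?).
    """
    parts = {
        "delta": delta * d_spot,
        "gamma": 0.5 * gamma * d_spot ** 2,
        "vega": vega * d_vol,
        "theta": theta * d_days,
    }
    unexplained = actual_pnl - sum(parts.values())
    # Relative test; a tiny floor avoids a zero threshold when actual P&L is zero.
    flagged = abs(unexplained) > tolerance * max(abs(actual_pnl), 1e-12)
    return parts, unexplained, flagged


greeks = dict(delta=5000.0, gamma=200.0, vega=1500.0, theta=-300.0)
moves = dict(d_spot=2.0, d_vol=-0.5, d_days=1.0)
for actual in (9500.0, 12000.0):
    parts, unexplained, flagged = explain_pnl(actual, **greeks, **moves)
    print(f"actual {actual:,.0f}: explained {sum(parts.values()):,.0f}, "
          f"unexplained {unexplained:,.0f}, investigate={flagged}")
```

A persistent residual in one direction is often more informative than a single large one: it suggests a missing risk factor or a mark that is drifting away from the market.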

The risk limits that the ALCO sets need to be of a type appropriate to the particular business line, and limit types may well differ from one business to another within the same organisation. As an example, we may consider an asset swap type operation where cheap (high-yield) bonds are bought and set up (hedged) against interest rate swaps to create LIBOR-plus assets. This type of business would not be meaningfully constrained by limits on pure parallel or rotational shifts of the yield curve, since the majority of the risk is actually embedded in the credit spread (yield differential) between the interest rate swap and the bond rates. The risk limits that the ALCO sets need to constrain the trading operation in terms of the risk/reward ratio actually desired by the firm.

It should therefore be impossible under the limit structure for the firm's existence to be jeopardised by a conceivable event (such as has occurred in the past), unless of course this is desired and mandated by the shareholders. For some organisations (say a small trading partnership where all the partners are active traders) it may be acceptable for the firm actually to go bankrupt in, for example, a 1987 crash scenario. However, this magnitude of risk appetite must be clearly understood by the firm and taken on consciously, rather than being something that happens by accident. Any firm that operates at this limit will, of course, be subject to very close scrutiny by the regulator and possibly to higher capital charges, since it is operated in a way that gives a probability of default much greater than the average.

5. Historic Data, Scenario Analysis and VAR Reports

In general, when we try to understand how markets may move in the future, we rely on analysis of past market events. This creates a requirement for extensive, timely and accurate data. Data can be extremely difficult to obtain in certain markets, for example for complex or long-dated OTC derivative products or for certain commodities, and this leads to the need to develop other approaches. Where data is available, it can be used to create statistical measures of market variability and of the probability of market movements.

We can also use specific past market events, such as the 1987 equity market crash or the 1994 bond market crash, to examine how such stresses would affect the valuation of our portfolio. We can also look at events such as market squeezes, where prices are driven artificially higher. Examples include the silver crisis in the early 1980s and the copper crisis in 1996. Squeezes tend to be a feature of commodity markets, because they are created by a short-term shortage of a consumable asset. They do, however, also occur in financial markets. Examples are the introduction of short-term deposit controls (as during the ERM crisis of 1992) and the delivery of an asset such as the cheapest-to-deliver bond on expiry of a futures contract. These types of analysis naturally produce P&L profiles of the book. They rest on scenario analysis in which historical events serve as benchmarks for changes in the value of the portfolio.

Stress tests are designed to identify events, which may or may not have occurred in the past, that are pathological for the particular portfolio in question. Stress tests are therefore portfolio dependent. VaR (value at risk) numbers are generated from historical data to establish the likely maximum loss at some confidence level. The common VaR approaches are the covariance method, Monte Carlo simulation and historical revaluation. The traditional technique for derivatives portfolios is to use point Greek sensitivities to market movements. These sensitivities quantify the rate of change of the portfolio's value with respect to changes in the underlying price, interest rates, volatilities and so on. All of these measures naturally give rise to limits. The ALCO can set these limits to keep the business within the desired risk/return profile in each dimension of market risk in which the firm wishes to be active.
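
Two of the three VaR approaches named above can be sketched in a few lines. This is a minimal illustration. It assumes the firm's systems have already produced the historical revaluation P&L vector and the factor covariance matrix. The function names, the 99% level and the normal quantile of about 2.326 are illustrative assumptions. Monte Carlo simulation is not shown.

```python
import numpy as np


def historical_var(pnl_scenarios, confidence=0.99):
    """Historical-revaluation VaR, reported as a positive loss.

    pnl_scenarios: P&L of today's portfolio revalued under each historical
    day's market moves.
    """
    pnl = np.asarray(pnl_scenarios, dtype=float)
    return -np.quantile(pnl, 1.0 - confidence)


def covariance_var(exposures, cov, z=2.326):
    """Variance-covariance VaR for a portfolio of linear exposures.

    exposures: P&L per unit return of each risk factor.
    cov: covariance matrix of daily factor returns.
    z: standard normal quantile (about 2.326 for 99% one-tailed).
    """
    w = np.asarray(exposures, dtype=float)
    sigma_pnl = np.sqrt(w @ np.asarray(cov, dtype=float) @ w)
    return z * sigma_pnl


# Placeholder data only: a random stand-in for 500 days of revalued P&L.
rng = np.random.default_rng(0)
print(historical_var(rng.normal(0.0, 10_000.0, size=500)))
# One factor, 1% daily volatility, exposure of 1m per unit return.
print(covariance_var([1_000_000.0], [[0.0001]]))
```

The covariance form is exact only for linear positions under normally distributed returns. That is one of the approximations that make VaR unsafe to use on its own.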

The purpose of scenario analysis is to search for particular sets of circumstances that lead to extreme P&L behaviour in the existing portfolio. The scenarios created are therefore synthetic rather than real market moves from history. Searching for the most pathological set of circumstances helps the firm look ahead to future events or circumstances that could bring that scenario about. The effect of scenario analysis is to focus the mind on the possible P&L impact of future events.
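
The search for pathological circumstances can be illustrated with a small grid of synthetic spot and volatility shocks. This is a sketch that assumes a delta-gamma-vega approximation of portfolio value. That approximation is itself a model and degrades for large moves, so full revaluation would be preferable in practice. The function names, Greeks and shock sizes are hypothetical.

```python
import itertools


def approx_pnl(delta, gamma, vega, spot, d_spot_pct, d_vol_pts):
    """Second-order Greek approximation of portfolio P&L.

    delta: value change per unit move in spot; gamma: change in delta per
    unit move in spot; vega: value change per volatility point.
    """
    ds = spot * d_spot_pct / 100.0
    return delta * ds + 0.5 * gamma * ds ** 2 + vega * d_vol_pts


def worst_scenario(delta, gamma, vega, spot, spot_shocks_pct, vol_shocks_pts):
    """Return ((spot shock %, vol shock pts), P&L) for the largest loss on the grid."""
    grid = itertools.product(spot_shocks_pct, vol_shocks_pts)
    return min(
        (((s, v), approx_pnl(delta, gamma, vega, spot, s, v)) for s, v in grid),
        key=lambda item: item[1],
    )


# Hypothetical short-gamma, short-vega book on an index at 5,000.
print(worst_scenario(delta=0.0, gamma=-0.5, vega=-20_000.0, spot=5_000.0,
                     spot_shocks_pct=[-20, -10, 0, 10, 20],
                     vol_shocks_pts=[-5, 0, 5, 10]))  # ((-20, 10), -450000.0)
```

For this book the worst case combines a large move in either direction with a volatility spike. This type of synthetic combination might never appear in a single historical episode.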

Risks in markets change rapidly. The portfolios change shape, and the market itself also changes. For this reason real-time monitoring, where possible, is extremely beneficial for managing risk and keeping it within limits. Regulators will generally look for daily risk reports as an absolute minimum standard.

The overall value at risk (VaR) number gives a big-picture indication of the general level of risk within the organisation. Used in isolation as a risk management tool, however, it has serious flaws. These flaws stem from the approximations that have to be made in estimating VaR, for example that covariance matrices are stable or that future events will resemble history. VaR is therefore potentially useful for high-level observation of risks and returns, for monitoring capital allocation and for setting the overall regulatory capital requirement. It is dangerous, however, to use it without also considering stress tests, scenarios and Greek sensitivities. VaR is an aggregate, probabilistic measure. The P&L arising under a stress test, by contrast, gives some idea of the maximum possible downside rather than a probability-weighted and correlated one.

Regulators will also look at how the firm's policy handles losses, in terms of stop-loss practice and the cutting of positions. They will also look at how it handles liquidity, or funding, against illiquid positions. This matters because balance sheet figures often do not reveal that illiquid positions exist. For example, an illiquid bond position hedged with futures can suffer an adverse movement in futures prices. This can lead to margin calls that existing banking lines cannot meet, while the bond itself cannot be realised because it lacks marketability.
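
The funding problem described above reduces to simple arithmetic. This sketch assumes a short futures hedge against a long bond. The function name, position size, tick value and undrawn banking line are hypothetical. It shows that variation margin is a cash outflow today. The offsetting gain on the illiquid bond remains unrealised and cannot be used to meet it.

```python
def funding_shortfall(contracts, price_move_ticks, tick_value, undrawn_lines):
    """Cash shortfall when futures variation margin exceeds available funding.

    contracts: signed futures position (negative = short hedge).
    price_move_ticks: futures price move in ticks (positive = price up).
    tick_value: cash value of one tick per contract.
    undrawn_lines: banking lines still available to meet margin calls.
    """
    variation_margin = -contracts * price_move_ticks * tick_value  # > 0 means cash paid out
    return max(0.0, variation_margin - undrawn_lines)


# Short 200 contracts, futures rally 100 ticks at 10 per tick: margin of 200,000
# against 150,000 of undrawn lines leaves a 50,000 shortfall.
print(funding_shortfall(-200, 100, 10.0, 150_000.0))  # 50000.0
```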

6. Liquidity Adjustment

Liquidity adjustments are changes to the valuation of positions that reflect their abnormally large or abnormally small size. Take, for example, a bond position that is twice the normal market size, where market size is £10M on the bid and £10M on the offer. The mark-to-market price would then need to reflect the market impact of doing two market-size trades back to back to flatten the position. In this particular case the liquidity adjustment may take the form of valuing the position at half the bid/offer spread below the bid price, which gives the liquidation value.
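
The half-spread rule can be derived from a simple assumption about market impact. This sketch assumes that the first market-size clip trades at the bid and that each further clip lowers the achievable price by one full bid/offer spread. On that assumption, the average exit price for a position of twice market size is exactly half a spread below the bid. The function name, prices and sizes are illustrative.

```python
def liquidation_price(bid, offer, position_size, market_size):
    """Average exit price for a long position sold in market-size clips.

    Assumption: clip k (k = 0, 1, 2, ...) trades at bid - k * spread, so the
    average over n clips is bid - spread * (n - 1) / 2. Fractional clip
    counts are interpolated linearly, which is a further simplification.
    """
    spread = offer - bid
    clips = position_size / market_size
    return bid - spread * (clips - 1) / 2.0


bid, offer = 99.50, 100.00         # price per 100 nominal (hypothetical)
nominal, market_size = 20e6, 10e6  # twice the £10M market size
exit_px = liquidation_price(bid, offer, nominal, market_size)
adjustment = (bid - exit_px) / 100.0 * nominal  # liquidity adjustment in currency
print(exit_px, adjustment)
```

A short position would be treated symmetrically, working up from the offer.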

Other estimates could be based on market information, for example by monitoring price movements (volatility) as a function of transaction size. The purpose of the liquidity adjustment is to establish the effect of illiquidity within the portfolio. As an extreme example, imagine a portfolio of Gilts (UK government bonds) worth, let us say, £100M. Compare it with a portfolio of long-dated, illiquid derivative positions, perhaps options on single emerging market stocks, that is also valued at £100M before any allowance for liquidity.

Suppose the firm needed to liquidate positions to fund, say, a margin call on one of its other books. The Gilt portfolio is much more likely to be sold quickly at a price close to its balance sheet mark-to-market value. It is highly unlikely that the illiquid derivative positions would be realised at anything like the same speed, or at a price remotely connected to their mark-to-market valuation. The liquidity adjustment is therefore vital if the figures in a firm's balance sheet, P&L and risk management reports are to be comparable on a like-for-like basis. Only then can one sensibly assess the firm's asset/liability mix.

7. Provisions

A firm can demonstrate that it has considered model risk and inadequately captured (or poor) information, and can mitigate the problem, by using both provisions and reserves. These provisions cover unexpected or unanticipated future costs of managing the risk that the current risk management framework does not price in. For example, a provision can take account of some of the model risk. It might also be appropriate to hold the P&L, or expected P&L, on long-dated positions as a provision. The provision would then be released over the life of the instrument, or at the end of its life when the total hedging costs are known with certainty.

Such an approach avoids the classic symptom of long-dated products accruing immediate day-one P&L, which is used to pay bonuses in the very early days of the risk management process, before the actual cost of running the risk position to maturity is known. This lack of foresight by some firms has led to difficulties in the past. So-called star traders have accrued large profits and large bonuses in the early life of the book, only to move to another organisation and do the same. They leave behind a risk management problem in the later life of the product that all but consumes (in some cases more than consumes) the total P&L booked upfront.
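
A short sketch compares the two release options mentioned above: release over the life of the instrument, or release at maturity once hedging costs are known. The function name and the use of equal per-period releases for the first option are illustrative assumptions. In practice the release profile is a policy decision for the firm.

```python
def reserve_release(day_one_pnl, n_periods, method="straight_line"):
    """P&L recognised in each period from a deferred day-one gain.

    method="straight_line": release evenly over the life of the trade.
    method="maturity": hold the whole reserve until the final period.
    """
    if n_periods <= 0:
        raise ValueError("n_periods must be positive")
    if method == "straight_line":
        return [day_one_pnl / n_periods] * n_periods
    if method == "maturity":
        return [0.0] * (n_periods - 1) + [float(day_one_pnl)]
    raise ValueError(f"unknown release method: {method}")


# Hypothetical 10-year trade with a 1m day-one gain, released annually.
schedule = reserve_release(1_000_000, 10)
print(schedule[0], sum(schedule))
```

Under either method, only the amount released in the first period can flow into first-year results, not the whole day-one P&L.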

8. Internal Controls

Let us consider other internal control issues that regulators inspect within firms. Clear levels of authority are an indication of good risk management. One example is a set of escalation events that progressively involve more senior individuals along the chain of command, depending on the size of, say, a P&L move or a change in risk.
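
An escalation ladder of this kind can be expressed as a simple lookup. The `ESCALATION_LADDER` thresholds and roles and the `escalate` function are hypothetical, and each firm would set its own. A real implementation would also trigger on changes in risk, not only on P&L.

```python
# Hypothetical escalation ladder: (absolute daily P&L move, who must be involved).
ESCALATION_LADDER = [
    (250_000, "desk head"),
    (1_000_000, "head of trading"),
    (5_000_000, "senior management and ALCO"),
]


def escalate(pnl_move, ladder=ESCALATION_LADDER):
    """Return every level of the chain of command that must be informed.

    Involvement is progressive: a larger move reaches all lower levels too.
    """
    size = abs(pnl_move)
    return [role for threshold, role in ladder if size >= threshold]


print(escalate(-1_200_000))  # ['desk head', 'head of trading']
```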

Sign-off at each level of authority is also a powerful indication of strong internal controls. At each level, an individual takes responsibility for any change in risk profile up to his or her authorised limits. The new product procedure, through which new products or risk types enter the firm, is an important area from a risk management and internal control perspective. New products frequently have unusual risk profiles and may take a form that the firm's existing risk management framework cannot readily book or control.

Any well risk-managed firm will therefore have extensive new product procedures that define what counts as a new product or new type of risk. It will also have appropriate sign-off techniques (e.g. review, sanity checks) at each level and in each department, to ensure that these products can be handled safely. If they cannot be handled safely within the existing framework, the firm handles them in a way that minimises their risk, by limiting their total volume or total number of transactions. This is particularly relevant where they must be handled outside the main system, for example on spreadsheets. In that case the risk controllers may impose a limit on the number of not-in-system (NIS) or spreadsheet trades. If these products become more popular, the spreadsheet procedures may be moved into the main risk management system with appropriate modelling. This frees capacity for other new products to be handled on an NIS basis, and it tends to be the natural migration path for new products that become mainstream.

The role and involvement of internal audit within a firm is of great interest to regulators, for a simple reason. Regulators realise that even the smallest firm is a relatively complex animal. It cannot be completely understood from relatively infrequent visits, nor from remote monitoring through regulatory reporting. The internal audit function, by contrast, can review and monitor the firm's activities on an ongoing basis, far more intimately than any regulator could hope to. The strength of the internal audit group, together with the comprehensive nature of its reviews, is a major comfort for any regulator.

The acid test, however, is how the firm's management acts on the recommendations in internal audit reports. It is pointless for internal audit to raise concerns or highlight weaknesses if its recommendations are ignored or their implementation is delayed for a long time. The experience of the internal audit staff is also a factor from a regulator's perspective.

Other risk management factors that regulators take into account include the experience and competence of dealing, middle office, operations and management staff. Regulators consider staff numbers and experience, as well as the staff's ability to work together and to understand the framework within which they are asked to co-operate. This naturally leads to an assessment of how mature the whole system is. Relevant signs are whether teething problems have been eliminated, whether areas of uncertainty in the risk framework have been resolved, and how the day-to-day workload and any problems are handled.

From a regulatory standpoint, management would be expected to review and monitor the risk management process continuously. Two useful techniques are feedback loops and critical analysis of what is and is not working. A feedback loop might involve deliberately inserting off-market prices or dummy deals and checking whether they are spotted. Such test conditions could be inserted into the framework on an anonymous or confidential basis to assess the system's ability to detect and correct errors. Management must then analyse critically what is working and what is not, and take remedial action based on the results.

In summary, the risk management process requires continuous review and detective and corrective action by management to keep the framework robust and workable. Any sensible regulator will expect to see management doing exactly that.

9. Practical Investigations into RM Practices

When looking at a firm from a regulatory standpoint and examining its risk management capability, it is important to consider the size and complexity of the organisation. Large financial trading entities typically have overseas traders who book positions to a parent entity, for example a London-based parent. They also typically run global books, whose positions are managed on a worldwide basis and passed from time zone to time zone while legally remaining on the London entity's books. They frequently hold complex derivatives, perhaps even ones that depend on multiple assets, such as combinations of FX, equity indices and interest rates. They typically run many portfolios across many asset classes (FX, equities, interest rates, commodities, credit, etc.), in derivatives as well as cash. They typically trade twenty-four hours a day in all products, although out-of-hours volumes are generally very low (e.g. FTSE in Asian hours).

Because of their global nature, such firms frequently have long reporting and communication lines. There will almost certainly be considerable office politics in the way the firm is managed and run. The political aspects should not be underestimated, because the political desires or aspirations of individuals within the framework can undermine good risk management practices. Something that is good for the firm from a risk management perspective may be bad for an individual or for part of a business group, and this naturally creates a political problem.

Large firms frequently have multiple systems that were developed over time or inherited from firms merged to form the larger entity. These systems bring their own problems of compatibility and a lack of “seamlessness” in the way they communicate with each other. It is not unusual for systems to need a large amount of reconciliation and manual rekeying of data before the risk management process can operate at all.

Small firms, with a limited number of personnel, can be relatively simple to understand. However, their staff may have too many responsibilities, some of which may conflict. Generally speaking, small firms have small information technology budgets. Their systems may therefore be underdeveloped or simplified, or may be custom systems that have not been updated for the current business. Small firms tend to specialise in one area, which can make it easier to understand the business flows and the interactions with clients and markets. They are also generally in one place, which makes communication and inspection easier from the regulator's point of view. A further advantage is that the firm's senior individuals are probably involved in the front line of the business.

This involvement of senior people at business level can give small firms an advantage over large ones, but it can cause problems of objectivity and segregation of duties. Small firms may be more vulnerable from a capital point of view, because they are likely to be less diversified and to run on smaller capital excesses. Close senior management involvement at front office level can therefore be even more important.

How, then, does a regulator approach the problem of examining a firm's risk management practices? The task is difficult but not impossible. An examination will generally involve a practical investigation of systems, personnel and reports. It will also involve following the flow of risk information through the organisation and assessing integrity and understanding in areas such as the middle office, back office, risk group and management.

One technique is to ask the same or similar questions on the same issues in each area of the firm and to compare the answers given at each level. This gives some indication of how consistently the risk management approach is understood across the organisation. In particular, it indicates whether risk management training and internal communication are adequate.

Another technique is to ask for information on the spur of the moment, without notice and without giving time for it to be specially prepared. This gives some indication of the availability, timeliness and completeness of the information within the firm. It says nothing about accuracy. It does, however, show how promptly information reaches staff, especially when compared with their stated work practices.

Another important factor to examine is whether the risk parameters used in the risk management process actually explain the profit and loss achieved over time. Movements in risk factors such as market level or interest rates, applied to the portfolio's risk sensitivities such as Delta, Gamma and Vega, should match the profit and loss achieved from revaluation. If they do not, there should be a suitable explanation of the unexplained profit and loss. Possible sources include commission trades, intra-day trading and unquantified risk factors. This area is generally known as actual versus forecast P&L. Adjusting actual P&L to remove non-market-risk items such as commissions or funding is called P&L cleaning. The risk parameters used in the risk management process should explain clean P&L well. If the level of explanation falls below, say, 90%, there may well be significant risks that the framework is not properly measuring or controlling. New risk factors may then need to be introduced.
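
The attribution test can be sketched as follows. This is a minimal illustration. It assumes a single underlying with Delta, Gamma and Vega sensitivities and a second-order expansion for the predicted P&L. The explanation ratio used here (one minus unexplained over clean P&L) is one of several definitions in use, and the 90% threshold is the illustrative figure from the text. All function names are hypothetical.

```python
def clean_pnl(actual_pnl, commissions, funding):
    """Remove non-market-risk items from actual P&L.

    commissions and funding are the signed amounts already included in
    actual_pnl (income positive, costs negative).
    """
    return actual_pnl - commissions - funding


def explain_pnl(clean, delta, gamma, vega, d_spot, d_vol_pts):
    """Compare clean P&L with the P&L predicted from risk sensitivities.

    Returns (predicted, unexplained, explained_fraction).
    """
    predicted = delta * d_spot + 0.5 * gamma * d_spot ** 2 + vega * d_vol_pts
    unexplained = clean - predicted
    explained = 1.0 - abs(unexplained) / abs(clean) if clean else float("nan")
    return predicted, unexplained, explained


def needs_review(explained_fraction, threshold=0.90):
    """Flag poorly explained days; written so that a NaN ratio is also flagged."""
    return not explained_fraction >= threshold


# Hypothetical day: actual P&L includes 20,000 of commission and 5,000 of funding cost.
clean = clean_pnl(130_000.0, 20_000.0, -5_000.0)  # 115,000 of clean P&L
predicted, unexplained, explained = explain_pnl(clean, 2_000.0, 10.0, 2_000.0, 50.0, -1.0)
print(predicted, unexplained, round(explained, 3), needs_review(explained))
```

Tracking the unexplained amount over many days matters more than any single day. A persistent one-sided residual suggests a missing risk factor.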

Regulators can also attend risk meetings as part of their practical investigation of a firm's risk management. Regular risk meetings can indicate a positive, collective (team) approach to risk management within the firm. In these meetings it is important to assess how risk is discussed, and whether each participant has access to, and understands, the same level of risk detail. Are the meetings democratic, are sensible decisions taken, and are they followed up?

Other investigations establish whether the risk management system actually captures all the risk positions on the firm's books. There will frequently be trades outside the main system (not-in-system trades), commonly handled in spreadsheets. It is important to establish that these NIS trades do not seriously threaten the firm's risk management integrity. The risk is that they receive a lower standard of measurement and analysis than the normal risk management framework would apply. This is particularly true of risk modelling and the estimation of model error. The quality and conservatism of the input parameters, and the determination of market values, may also suffer.

The internal audit department plays a vital part in the risk management process. This group can potentially examine and review the risk management process over a long period, in far greater depth than any regulator could hope to achieve. From a regulatory standpoint it is therefore important to assess how the internal audit group is viewed within the firm. The firm's attitude towards internal audit may well determine how ready it is to act on that group's recommendations. Internal audit reports and their recommendations can therefore be a strong indicator of the firm's readiness to adapt its risk management practices in response to constructive internal criticism. Management's response to those recommendations is an even stronger indicator.

When traders are physically located offshore, or institute trades on behalf of a regulated entity in another country, the regulator needs to understand, and to some extent verify, the controls placed on them. These are the controls imposed by the management of the firm to which the positions are booked. Monitoring and controlling activities far from the parent organisation is generally considered more difficult, especially when trading occurs overnight. Given management's responsibility for the financial integrity of its firm, it must show clear control of its overseas traders. This applies even when no staff are physically present in the parent entity to which the risk is being booked.

Controlling risk-taking by individuals located away from the parent company is an important part of a firm's overall risk management. This is particularly true when the traders are active while London or home-based management is asleep. Suitable controls must therefore be in place to limit the amount of unauthorised or extreme risk that offshore traders can assume. In any practical investigation of risk management within a firm, the regulator should therefore understand the nature of any offshore trading. It should also understand the mechanisms that keep that trading within management's desired risk/return appetite. Offshore staff should also be aware of the restrictions their parent may place on their activities. These restrictions may come from business management and potentially from regulatory requirements as well, and may cover conduct of business as well as capital adequacy.

A regulator may also assess risk management by investigating the same issue in different areas of the organisation. For example, the regulator might ask the trader, the middle office, the independent valuation staff, trading management and senior management where the greatest risk lies. Comparing and contrasting their answers builds up a picture of potential risk management weaknesses.

The firm's attitude towards financial provisions and reserves, and who decides them, can also indicate how sophisticated its risk management process is. It also shows who controls and has authority over that process. Provisions generally reduce the profit reported from trading, so they tend to dilute the perceived returns of the trading operation. In many organisations traders are kings, so anyone able to provision against their profits holds a position of power within the risk management framework. An independent risk management group with this capability is clearly more than a puppet. The group's ability to provision against traders' profits, and to recommend or implement changes in risk profile, is a distinctive hallmark of a truly independent and potentially effective risk management group. Details of its past actions in this respect are powerful evidence of its potency.

Any regulatory review of risk management within firms will spend a great deal of time on mark to market. As mentioned previously, mark to market is the cornerstone of the firm's risk management, and any error in this area can lead to serious errors throughout the whole system. Two things strongly indicate how robust the risk management framework is: the firm's approach and attitude towards mark to market, and the seniority of the individuals who make mark-to-market assessments.

Generally the regulator will look for VaR (value at risk) analysis, scenario analysis and market-event stress tests, to establish whether the firm uses a sufficient variety of techniques and methodologies to assess risk. The regulator will invariably look for limits on all types of risk activity that set the maximum size of exposure to each risk factor. It will look for concrete evidence that these limits are taken seriously and acted upon. It will also check that the limits adequately describe the firm's risk appetite and that they bear a sensible relation to the firm's trading size. For example, a limit may be many times the current market risk position, or even many times the largest risk ever taken in that risk parameter. Such a limit may be viewed as completely unconstraining. It may also indicate a weak attitude to limit setting and perhaps avoidance of the issue by management. In these circumstances the limit is not really a limit at all; it merely guarantees that risk is always within the limit, sensible or not. The regulator would expect limits to be tested in the firm's normal activities. It should be obvious to an observer that risk is being managed and constrained within them.
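
The test for unconstraining limits can be sketched as a utilisation check. In this sketch a limit counts as "unconstraining" if it exceeds three times the largest exposure ever taken. That cut-off is hypothetical, chosen for illustration, and is not a regulatory standard. The `review_limit` function and the example figures are also hypothetical.

```python
def review_limit(limit, current_exposure, max_historical_exposure, slack_multiple=3.0):
    """Classify a limit as 'breach', 'unconstraining' or 'ok'.

    Returns (utilisation, status), where utilisation is |exposure| / limit.
    """
    if limit <= 0:
        raise ValueError("limit must be positive")
    utilisation = abs(current_exposure) / limit
    if utilisation > 1.0:
        return utilisation, "breach"
    if limit > slack_multiple * abs(max_historical_exposure):
        return utilisation, "unconstraining"
    return utilisation, "ok"


# Hypothetical risk factors: (limit, current exposure, largest exposure ever taken).
limits = {
    "factor A": (10e6, 1e6, 2e6),
    "factor B": (3e6, 2.5e6, 2.8e6),
    "factor C": (3e6, 3.5e6, 3.5e6),
}
for factor, (limit, current, largest) in limits.items():
    print(factor, review_limit(limit, current, largest))
```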

The regulator's investigation into a firm's risk management will invariably rest largely on questions, and on analysis of the answers and documentary evidence received. The questions aim to reveal and verify how well the risk management process is understood and what attitudes exist towards it. It would not be unusual, for example, to ask the senior executive officer (SEO) where he sees weaknesses in his firm's existing risk management framework. An answer that there are none would clearly be met with some surprise, because any framework or system will generally contain some elements that are less robust than others. The firm's policy would normally be expected to improve these areas over time.

When talking to an equity derivatives trader, it would not be unreasonable to ask what his maximum loss would be under a 1987 equity market crash scenario. He may be able to answer readily because he uses scenario analysis. One then needs to probe whether his answer includes the probable changes in volatility, and the illiquidity of the underlying hedges that would probably follow such an event. Considering these factors clarifies the possible magnitude of loss under such a scenario, which a simple model of a market move may not capture. More importantly, it shows how far the trader has thought about the limitations of such scenario analysis.

When talking to an independent risk manager, one may ask, for example, about bid/offer spreads on illiquid positions. These pose a particularly difficult problem, because a mark to market is needed to feed the risk management and P&L systems. For truly illiquid products it is of course impossible to obtain a market valuation. Other techniques must therefore be used to bound (in the mathematical sense) the possible values of the position conservatively. One example is limiting the value of an American option to its intrinsic (immediate exercise) value. Needless to say, the regulator is looking for a proactive and conservative approach to valuation. It would view a general lack of understanding of the problem in this area as potentially very serious.
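
The intrinsic-value bound can be sketched for a long American option position. An American option can be exercised immediately, so its value can never fall below intrinsic value. Marking a long holding at intrinsic therefore cannot overstate it, and the difference from any model value is held as a reserve. The function names are hypothetical. Short positions are deliberately excluded, because intrinsic value is not a conservative mark for them.

```python
def intrinsic_value(spot, strike, is_call):
    """Value of exercising the option immediately."""
    return max(spot - strike, 0.0) if is_call else max(strike - spot, 0.0)


def conservative_long_mark(model_value, spot, strike, is_call):
    """Mark a long American option at intrinsic value and reserve the rest.

    Returns (conservative value, reserve) per option.
    """
    floor = intrinsic_value(spot, strike, is_call)
    if model_value < floor:
        # A model value below intrinsic breaches a no-arbitrage bound.
        raise ValueError("model value below intrinsic value; check the model")
    return floor, model_value - floor


print(conservative_long_mark(12.0, 105.0, 100.0, is_call=True))   # (5.0, 7.0)
print(conservative_long_mark(3.0, 105.0, 100.0, is_call=False))   # (0.0, 3.0)
```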

The person responsible for verifying market prices may well be asked how they verify long-term volatility or correlation in relatively illiquid markets such as long-dated equity derivatives. Consider long-dated call options on an equity underlying, quantoed (i.e. currency protected) into another currency. Pricing them requires the forward price of the underlying asset, its volatility, the FX volatility and the correlation between the asset and the FX rate.
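
The textbook quanto forward shows why the correlation input matters. This sketch works under Black-Scholes assumptions (lognormal asset and FX rate, constant parameters). It assumes the FX rate is quoted as domestic currency per unit of foreign currency, and that `rho` is the correlation between the asset's returns and the FX rate's returns. With other quoting conventions the sign of the adjustment flips. The function name and parameter values are hypothetical.

```python
import math


def quanto_forward(spot, r_foreign, div_yield, sigma_asset, sigma_fx, rho, t):
    """Forward of a foreign-currency asset paid out in domestic currency at a fixed rate.

    F = S * exp((r_f - q - rho * sigma_S * sigma_X) * T)
    """
    return spot * math.exp((r_foreign - div_yield - rho * sigma_asset * sigma_fx) * t)


# A 10-year quanto forward across a range of an input that is hard to observe.
for rho in (-0.5, 0.0, 0.5):
    print(rho, round(quanto_forward(100.0, 0.03, 0.01, 0.25, 0.10, rho, 10.0), 2))
```

Running the loop shows how far the forward moves across a plausible range of correlation. The price verifier has to be able to defend exactly this number.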

All of these factors are very difficult to obtain from the market, and there is the further problem of which time horizon to choose for measuring them. The task is hard enough for the trader. When someone other than the trader checks the prices, it is all too easy for that person simply to rely on the trader's market value. This is a clear route to a breakdown in the risk management framework, because the trader may deliberately input optimistic prices for his or her positions. This appears to happen all too frequently.

A further question is to ask the SEO to quantify the loss that would occur if, for example, the 1994 bond market crash were repeated. It is more interesting to follow up by asking whether a loss of this size is within the firm's trading mandate or risk appetite. He may well regard the event as unlikely, but it is possible. The firm therefore needs to be comfortable that the loss this possibility entails, however improbable, lies within the range of outcomes it has deemed acceptable.
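
The SEO's question can be framed as a revaluation under a yield-curve shock, followed by a comparison with the firm's stated risk appetite. This sketch uses a linear DV01 approximation, which ignores convexity. The function names, bucket DV01s, shock sizes and loss appetite are hypothetical. They are not a reconstruction of the actual 1994 moves.

```python
def stress_pnl(dv01_by_bucket, shock_bp_by_bucket):
    """Linear P&L under a yield-curve shock.

    dv01_by_bucket: loss per 1bp rise in yields for each maturity bucket
    (positive for a long bond book). Buckets without a shock are unchanged.
    """
    return -sum(dv01 * shock_bp_by_bucket.get(bucket, 0.0)
                for bucket, dv01 in dv01_by_bucket.items())


def within_appetite(pnl, max_acceptable_loss):
    """True if the scenario loss is inside the loss the firm has deemed acceptable."""
    return -pnl <= max_acceptable_loss


book = {"2y": 20_000, "10y": 50_000}  # hypothetical DV01s
scenario = {"2y": 150, "10y": 200}    # hypothetical sell-off, in bp
pnl = stress_pnl(book, scenario)
print(pnl, within_appetite(pnl, max_acceptable_loss=10_000_000))  # -13000000 False
```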

It is also useful to ask a trader what he would do if asked to quote a price on something he had not traded before but which resembled products already on his book. This probes the tricky question of what constitutes a new product or a new risk. It also tests how precisely the trader's product mandate has been defined. Finally, it tests whether he or she would trade a new type of risk or product before the new product process has examined it and all areas of the firm, including operations, compliance and management, have approved it.

The

regulator's overall view therefore is that good risk management is good business

and more importantly it should work rather than be a sop for the regulators.

Any large-scale risk management system needs continuous review and challenge if it is to remain effective and current. It is the firm, not the regulator, that has to drive this process, since no regulator can have the detailed, continuous knowledge of the operation that the firm itself must have in order to run it. Risk management involves the entire organisation, and no element of the organisation lies outside its framework.

In examining risk management within the firm, the regulator needs to test and challenge the effectiveness of internal controls and of the firm's understanding of its risks, not through detailed rules but through practical demonstrations of acceptable and workable minimum standards appropriate to that particular operation. Firms that demonstrate good, proactive and dynamic risk management should be encouraged and rewarded by the regulator with a less onerous regulatory burden, through both fewer visits and lower regulatory capital charges. Lower risk capital charges are therefore a reward to be won, not a right to be demanded. Indeed, a poor performance in a voluntary risk management review may result in higher rather than lower charges for the complacent and poorly managed firm.

10.

Summary

In this brief paper I have outlined some of the qualitative criteria upon which a statutory financial regulator may review risk management practices within regulated firms. I have also identified some of the possible areas of focus and some of the perspectives that a regulator may bring to certain aspects of financial risk management, and have discussed some of the techniques and methodologies of review. I have considered how risk management needs to be viewed in terms of the particular firm and risk being considered, since it must be treated as situation specific. The paper strongly argues in favour of “risk not rules” and for a framework in which firms are encouraged organically (not taught or led) towards better risk management practices through regulatory incentives such as less intrusive regulation and lower regulatory capital charges. Finally, it argues that good risk management is good business, and that regulator and regulated alike have a strong mutual interest in continually striving to achieve it.
